Multimodal Large Language Models (MLLMs) have significantly advanced embodied AI, and using them to benchmark robotic intelligence has become a pivotal trend. However, existing frameworks remain predominantly confined to single-arm manipulation, failing to capture the spatio-temporal coordination required for bimanual tasks like lifting a heavy pot. To address this, we introduce BiManiBench, a hierarchical benchmark evaluating MLLMs across three tiers: fundamental spatial reasoning, high-level action planning, and low-level end-effector control. Our framework isolates unique bimanual challenges, such as arm reachability and kinematic constraints, thereby distinguishing perceptual hallucinations from planning failures. Analysis of over 30 state-of-the-art models reveals that despite high-level reasoning proficiency, MLLMs struggle with dual-arm spatial grounding and control, frequently resulting in mutual interference and sequencing errors. These findings suggest the current paradigm lacks a deep understanding of mutual kinematic constraints, highlighting the need for future research to focus on inter-arm collision-avoidance and fine-grained temporal sequencing.

BiManiBench is the first hierarchical benchmark specifically designed to systematically evaluate the bimanual coordination capabilities of Multimodal Large Language Models (MLLMs). While current research in embodied AI has made significant strides in single-arm manipulation, bimanual coordination remains a formidable challenge. It requires more than just parallel execution; it demands rigorous spatiotemporal synchronization and dynamic role assignment to navigate complex kinematic constraints and prevent self-collisions. BiManiBench addresses this critical gap by providing a dedicated platform to analyze how foundation models manage the unique complexities of dual-arm physical interaction.

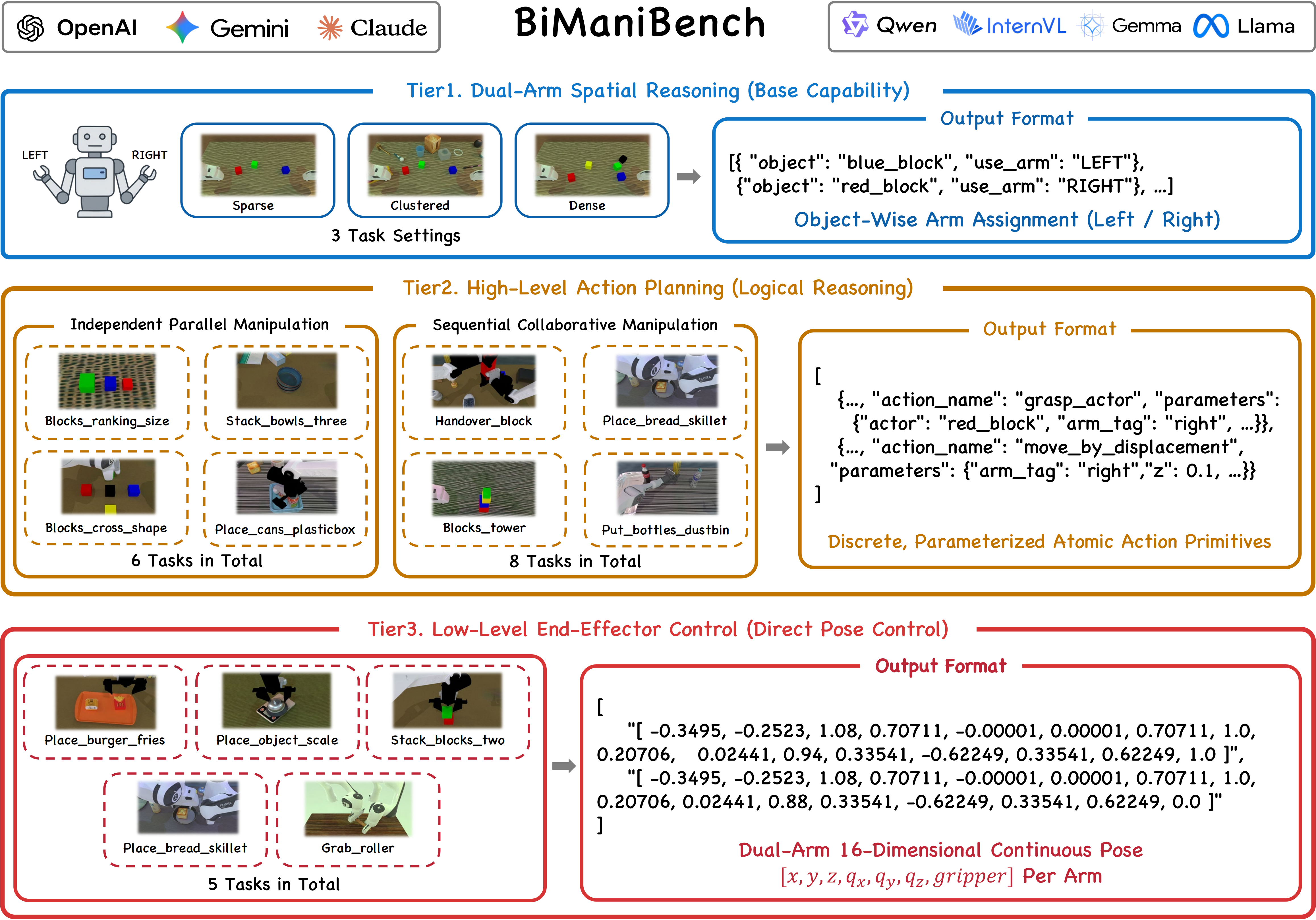

Figure 1: The hierarchical evaluation framework of BiManiBench, deconstructing bimanual coordination into three tiers of abstraction.

As illustrated in Figure 1, our benchmark features a comprehensive three-tier evaluation framework that deconstructs bimanual tasks into different levels of abstraction. Tier 1 (Dual-Arm Spatial Reasoning) assesses fundamental workspace awareness and arm allocation. Tier 2 (High-Level Action Planning) evaluates long-horizon reasoning under diverse coordination modes, including independent parallel tasks and complex sequential collaborative manipulation. Tier 3 (Low-Level End-Effector Control) tests the model’s ability to directly generate fine-grained, 16-dimensional continuous poses for precise bimanual synchronization. This hierarchical design allows researchers to isolate specific failure modes and distinguish between perceptual hallucinations and planning deficiencies.
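To make the Tier 3 output concrete, the sketch below splits a 16-dimensional action into two per-arm poses. The layout is an assumption for illustration only, not something stated above: each arm is taken to occupy 8 values (3 for position, 4 for a `w, x, y, z` quaternion, 1 for gripper state), with the left arm first. The names `ArmPose` and `split_bimanual_action` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class ArmPose:
    """One arm's end-effector target (hypothetical layout, see assumptions)."""
    position: Tuple[float, float, float]
    quaternion: Tuple[float, float, float, float]  # assumed order: w, x, y, z
    gripper: float                                 # assumed: 1.0 open, 0.0 closed


def split_bimanual_action(action: Sequence[float]) -> Tuple[ArmPose, ArmPose]:
    """Split a 16-D vector into (left, right) poses.

    ASSUMPTION: the vector is [left(8), right(8)], each arm being
    [x, y, z, qw, qx, qy, qz, gripper]. The benchmark's real layout may differ.
    """
    if len(action) != 16:
        raise ValueError(f"expected 16 values, got {len(action)}")

    def to_pose(chunk: Sequence[float]) -> ArmPose:
        x, y, z, qw, qx, qy, qz, grip = (float(v) for v in chunk)
        norm = math.sqrt(qw * qw + qx * qx + qy * qy + qz * qz)
        if norm == 0.0:
            raise ValueError("quaternion has zero norm")
        # Normalize so the orientation is a valid unit quaternion.
        quat = (qw / norm, qx / norm, qy / norm, qz / norm)
        return ArmPose((x, y, z), quat, grip)

    return to_pose(action[:8]), to_pose(action[8:])
```

Validating the vector length and renormalizing the quaternion before execution is one simple way to separate malformed model outputs from genuine coordination failures.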

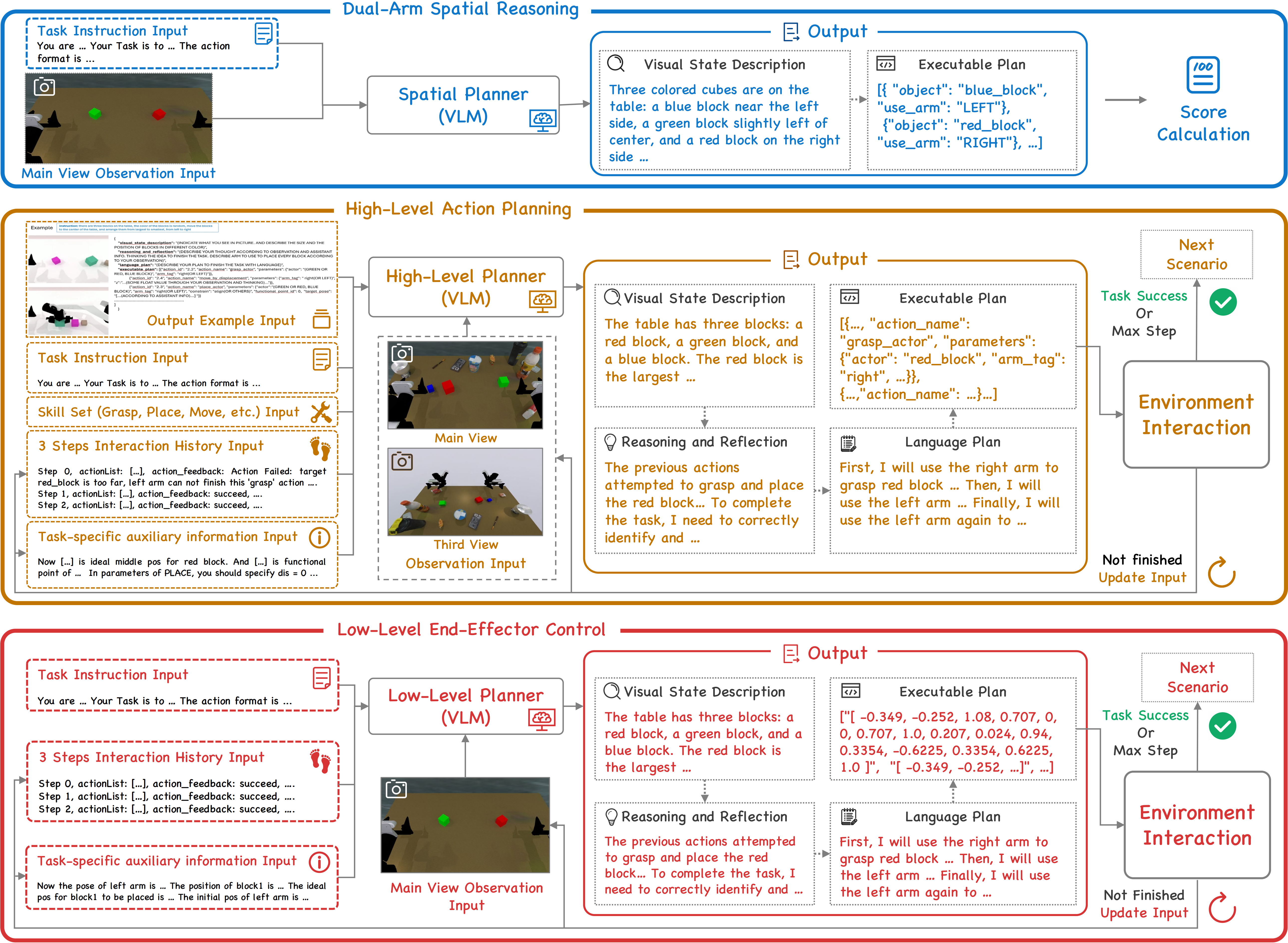

Figure 2: The vision-driven agent pipeline designed for structured multimodal perception and reasoning.

The core of our evaluation is supported by a vision-driven agent pipeline designed for structured multimodal perception and reasoning (Figure 2). The agent processes diverse inputs, including multi-view observations (main and third-person views), language instructions, and task-specific auxiliary information, to bridge the gap between perception and action. Within each planning step, the MLLM functions as a central "brain" that generates a visual state description, performs internal reasoning and reflection, and formulates a language-based plan before emitting a structured, executable plan in JSON format. Because this process runs as an iterative closed loop, the agent can adapt its coordination strategy as the environment state evolves.
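As a rough illustration of the structured output described above, the following sketch parses and validates one planning step's JSON. The field names (`visual_state_description`, `reasoning_and_reflection`, `language_plan`, `executable_plan`) and the per-action keys are assumptions chosen to mirror the four stages in the text; the actual schema used by BiManiBench may differ.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict, List

# ASSUMPTION: hypothetical key names mirroring the four stages in the text.
REQUIRED_KEYS = (
    "visual_state_description",
    "reasoning_and_reflection",
    "language_plan",
    "executable_plan",
)


@dataclass
class StepOutput:
    visual_state_description: str
    reasoning_and_reflection: str
    language_plan: str
    executable_plan: List[Dict[str, Any]]  # one dict per action (hypothetical)


def parse_step_output(raw: str) -> StepOutput:
    """Parse one planning step emitted by the model, rejecting malformed output."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    missing = [key for key in REQUIRED_KEYS if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    plan = data["executable_plan"]
    if not isinstance(plan, list) or not all(isinstance(a, dict) for a in plan):
        raise ValueError("executable_plan must be a list of action objects")
    return StepOutput(
        visual_state_description=str(data["visual_state_description"]),
        reasoning_and_reflection=str(data["reasoning_and_reflection"]),
        language_plan=str(data["language_plan"]),
        executable_plan=plan,
    )
```

In a closed loop, a parse failure of this kind could be fed back to the model as an error message on the next step rather than silently counted as a task failure; whether the benchmark does so is not stated here.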

Through an extensive empirical study of over 30 state-of-the-art models, including proprietary systems like GPT-5, Gemini, and Claude, our results reveal a significant "reasoning-actuation gap." While modern MLLMs demonstrate proficiency in high-level strategic planning, their spatial grounding is fragile and their dual-arm control is imprecise. By pinpointing these bottlenecks, BiManiBench provides a foundational framework and diagnostic tool for the community to develop more robust, versatile robotic agents capable of human-like physical coordination.


@article{wu2026bimanibench,
  title   = {BiManiBench: A Hierarchical Benchmark for Evaluating Bimanual Coordination of Multimodal Large Language Models},
  author  = {Wu, Xin and Liang, Zhixuan and Ma, Yue and Hu, Mengkang and Qin, Zhiyuan and Li, Xiu},
  journal = {arXiv preprint arXiv:2602.08392},
  year    = {2026},
  url     = {https://arxiv.org/abs/2602.08392}
}